Zurichsee

Contents

# -*- coding: utf-8 -*-

# This is a report using the data from IQAASL.

# IQAASL was a project funded by the Swiss Confederation

# It produces a summary of litter survey results for a defined region.

# These charts serve as the models for the development of plagespropres.ch

# The data is gathered by volunteers.

# Please remember all copyrights apply, please give credit when applicable

# The repo is maintained by the community effective January 01, 2022

# There is ample opportunity to contribute, learn and teach

# contact dev@hammerdirt.ch

# Dies ist ein Bericht, der die Daten von IQAASL verwendet.

# IQAASL war ein von der Schweizerischen Eidgenossenschaft finanziertes Projekt.

# Es erstellt eine Zusammenfassung der Ergebnisse der Littering-Umfrage für eine bestimmte Region.

# Diese Grafiken dienten als Vorlage für die Entwicklung von plagespropres.ch.

# Die Daten werden von Freiwilligen gesammelt.

# Bitte denken Sie daran, dass alle Copyrights gelten, bitte geben Sie den Namen an, wenn zutreffend.

# Das Repo wird ab dem 01. Januar 2022 von der Community gepflegt.

# Es gibt reichlich Gelegenheit, etwas beizutragen, zu lernen und zu lehren.

# Kontakt dev@hammerdirt.ch

# Il s'agit d'un rapport utilisant les données de IQAASL.

# IQAASL était un projet financé par la Confédération suisse.

# Il produit un résumé des résultats de l'enquête sur les déchets sauvages pour une région définie.

# Ces tableaux ont servi de modèles pour le développement de plagespropres.ch

# Les données sont recueillies par des bénévoles.

# N'oubliez pas que tous les droits d'auteur s'appliquent, veuillez indiquer le crédit lorsque cela est possible.

# Le dépôt est maintenu par la communauté à partir du 1er janvier 2022.

# Il y a de nombreuses possibilités de contribuer, d'apprendre et d'enseigner.

# contact dev@hammerdirt.ch

# sys, file and nav packages:

import datetime as dt

# math packages:

import pandas as pd

import numpy as np

from scipy import stats

from statsmodels.distributions.empirical_distribution import ECDF

# charting:

import matplotlib.pyplot as plt

import matplotlib.dates as mdates

from matplotlib import ticker

from matplotlib.colors import LinearSegmentedColormap

import seaborn as sns

# home brew utitilties

import resources.chart_kwargs as ck

import resources.sr_ut as sut

# images and display

from IPython.display import Markdown as md

# set some parameters:

start_date = "2020-03-01"

end_date ="2021-05-31"

start_end = [start_date, end_date]

a_fail_rate = 50

unit_label = "p/100m"

a_color = "saddlebrown"

# colors for gradients

cmap2 = ck.cmap2

colors_palette = ck.colors_palette

# set the maps

bassin_map = "resources/maps/zurichsee_scaled.jpeg"

# top level aggregation

top = "All survey areas"

# define the feature level and components

this_feature = {'slug':'bt', 'name':"Zurichsee", 'level':'water_name_slug'}

this_level = 'city'

this_bassin = "linth"

bassin_label ="Linth survey area"

lakes_of_interest = ["zurichsee"]

# explanatory variables:

luse_exp = ["% buildings", "% recreation", "% agg", "% woods", "streets km", "intersects"]

# common aggregations

agg_pcs_quantity = {unit_label:"sum", "quantity":"sum"}

agg_pcs_median = {unit_label:"median", "quantity":"sum"}

# aggregation of dimensional data

agg_dims = {"total_w":"sum", "mac_plast_w":"sum", "area":"sum", "length":"sum"}

# columns needed

use_these_cols = ["loc_date" ,

"% to buildings",

"% to trans",

"% to recreation",

"% to agg",

"% to woods",

"population",

this_level,

"streets km",

"intersects",

"length",

"groupname",

"code"

]

# get your data:

dfBeaches = pd.read_csv("resources/beaches_with_land_use_rates.csv")

dfCodes = pd.read_csv("resources/codes_with_group_names_2015.csv")

dfDims = pd.read_csv("resources/corrected_dims.csv")

# set the index of the beach data to location slug

dfBeaches.set_index("slug", inplace=True)

# make a map to city names

city_map = dfBeaches.city

# map water_name_slug to water_name

wname_wname = dfBeaches[["water_name_slug","water_name"]].reset_index(drop=True).drop_duplicates().set_index("water_name_slug")

dfCodes.set_index("code", inplace=True)

codes_to_change = [

["G74", "description", "Insulation foams"],

["G940", "description", "Foamed EVA for crafts and sports"],

["G96", "description", "Sanitary-pads/tampons, applicators"],

["G178", "description", "Metal bottle caps and lids"],

["G82", "description", "Expanded foams 2.5cm - 50cm"],

["G81", "description", "Expanded foams .5cm - 2.5cm"],

["G117", "description", "Expanded foams < 5mm"],

["G75", "description", "Plastic/foamed polystyrene 0 - 2.5cm"],

["G76", "description", "Plastic/foamed polystyrene 2.5cm - 50cm"],

["G24", "description", "Plastic lid rings"],

["G33", "description", "Lids for togo drinks plastic"],

["G3", "description", "Plastic bags, carier bags"],

["G204", "description", "Bricks, pipes not plastic"],

["G904", "description", "Plastic fireworks"],

["G211", "description", "Swabs, bandaging, medical"],

]

for x in codes_to_change:

dfCodes = sut.shorten_the_value(x, dfCodes)

# the surveyor designated the object as aluminum instead of metal

dfCodes.loc["G708", "material"] = "Metal"

# make a map to the code descriptions

code_description_map = dfCodes.description

# make a map to the code materials

code_material_map = dfCodes.material

13. Zurichsee¶

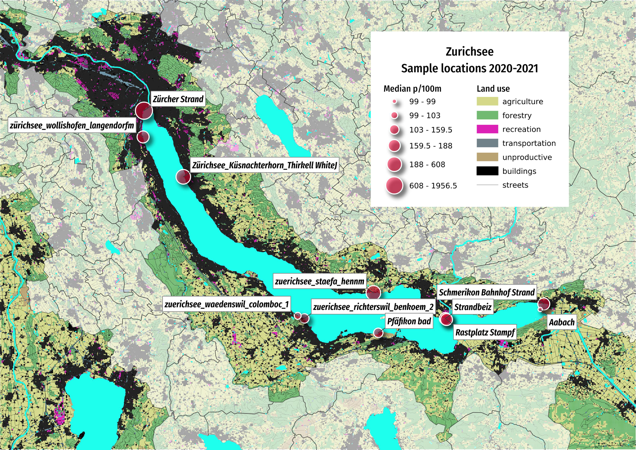

Below: Map of survey locations March 2020 - May 2021. Marker diameter = the mean survey result in pieces of litter per 100 meters (p/100m).

13.1. Sample locations¶

# this is the data before the expanded foams and fragmented plastics are aggregated to Gfrags and Gfoams

before_agg = pd.read_csv("resources/checked_before_agg_sdata_eos_2020_21.csv")

# this is the aggregated survey data that is being used

# a_data is all the data in the survey period

a_data = pd.read_csv(F"resources/checked_sdata_eos_2020_21.csv")

a_data["date"] = pd.to_datetime(a_data.date)

a_data.rename(columns={"% to agg":"% ag", "% to recreation": "% recreation", "% to woods":"% woods", "% to buildings":"% buildings"}, inplace=True)

luse_exp = ["% buildings", "% recreation", "% ag", "% woods", "streets km", "intersects"]

fd = sut.feature_data(a_data, this_feature["level"], these_features=lakes_of_interest)

# cumulative statistics for each code

code_totals = sut.the_aggregated_object_values(fd, agg=agg_pcs_median, description_map=code_description_map, material_map=code_material_map)

# daily survey totals

dt_all = fd.groupby(["loc_date","location",this_level, "date"], as_index=False).agg(agg_pcs_quantity )

# the materials table

fd_mat_totals = sut.the_ratio_object_to_total(code_totals)

# summary statistics, nsamples, nmunicipalities, names of citys, population

t = sut.make_table_values(fd, col_nunique=["location", "loc_date", "city"], col_sum=["quantity"], col_median=[])

# make a map to the population values for each survey location/city

fd_pop_map = dfBeaches.loc[fd.location.unique()][["city", "population"]].copy()

fd_pop_map.drop_duplicates(inplace=True)

# update t with the population data

t.update(sut.make_table_values(fd_pop_map, col_nunique=["city"], col_sum=["population"], col_median=[]))

# update t with the list of locations from fd

t.update({"locations":fd.location.unique()})

# join the strings into comma separated list

obj_string = "{:,}".format(t["quantity"])

surv_string = "{:,}".format(t["loc_date"])

pop_string = "{:,}".format(int(t["population"]))

# make strings

date_quantity_context = F"For the period between {start_date[:-3]} and {end_date[:-3]}, a total of {obj_string } objects were removed and identified over the course of {surv_string} surveys."

geo_context = F"The {this_feature['name']} results include {t['location']} different locations in {t['city']} different municipalities with a combined population of approximately {pop_string}."

munis_joined = ", ".join(sorted(fd_pop_map["city"]))

# put that all together:

lake_string = F"""

{date_quantity_context} {geo_context }

*{this_feature["name"]} municipalities:*\n\n>{munis_joined}

"""

md(lake_string)

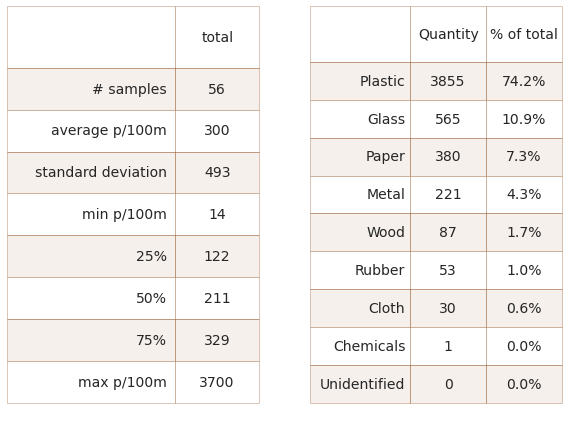

For the period between 2020-03 and 2021-05, a total of 5,192 objects were removed and identified over the course of 56 surveys. The Zurichsee results include 11 different locations in 7 different municipalities with a combined population of approximately 504,874.

Zurichsee municipalities:

Freienbach, Küsnacht (ZH), Rapperswil-Jona, Richterswil, Schmerikon, Stäfa, Zürich

13.1.1. Cumulative totals by municipality¶

dims_parameters = dict(this_level=this_level,

locations=fd.location.unique(),

start_end=start_end,

city_map=city_map,

agg_dims=agg_dims)

dims_table = sut.gather_dimensional_data(dfDims, **dims_parameters)

# a map of total quantity for each component

q_map = fd.groupby(this_level).quantity.sum()

# assgin the quantity and sample numbers to the dims table

for name in dims_table.index:

dims_table.loc[name, "samples"] = fd[fd[this_level] == name].loc_date.nunique()

dims_table.loc[name, "quantity"] = q_map[name]

# make a total column for the top feature data, the sum of all the components

dims_table.loc[this_feature["name"]]= dims_table.sum(numeric_only=True, axis=0)

# change the column names to user friendly syle

dims_table.rename(columns=sut.update_dictionary(sut.dims_table_columns), inplace=True)

# format the numercial data

dims_table.sort_values(by=["items"], ascending=False, inplace=True)

# change to formatted strings

dims_table["plastic kg"] = dims_table["plastic kg"]/1000

dims_table[["m²", "meters", "samples", "items"]] = dims_table[["m²", "meters", "samples", "items"]].applymap(lambda x: "{:,}".format(int(x)))

dims_table[["plastic kg", "total kg"]] = dims_table[["plastic kg", "total kg"]].applymap(lambda x: "{:.2f}".format(x))

# figure caption

agg_caption = F"""

*__Below:__ The cumulative weights and measures for {this_feature["name"]} and municipalities*

"""

md(agg_caption)

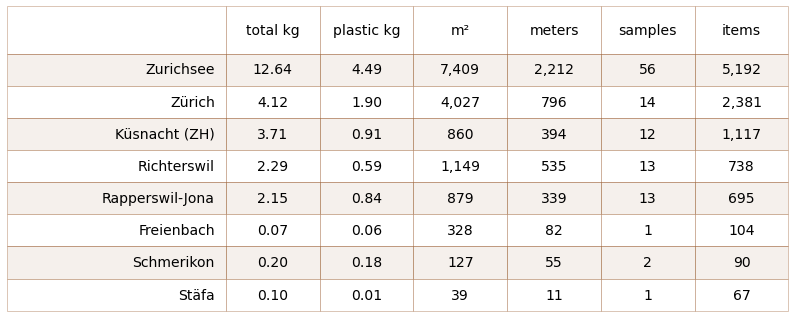

Below: The cumulative weights and measures for Zurichsee and municipalities

# make table

data = dims_table.reset_index()

colLabels = data.columns

fig, ax = plt.subplots(figsize=(len(colLabels)*2,len(data)*.7))

sut.hide_spines_ticks_grids(ax)

table_one = sut.make_a_table(ax, data.values, colLabels=colLabels, colWidths=[.28, *[.12]*6], a_color="saddlebrown", )

table_one.get_celld()[(0,0)].get_text().set_text(" ")

plt.show()

plt.tight_layout()

plt.close()

13.1.2. Distribution of Survey results¶

# the feature surveys to chart

fd_dindex = dt_all.set_index("date")

# all the other surveys

ots = dict(level_to_exclude=this_feature["level"], components_to_exclude=fd[this_feature["level"]].unique())

dts_date = sut.the_other_surveys(a_data, **ots)

# group the outher surveys by date and total pcs_m

ots_params = dict(agg_this = {unit_label:"sum"}, these_columns = ["loc_date","date"])

dts_date = sut.group_these_columns(dts_date, **ots_params)

# get the monthly or quarterly results for the feature

resample_plot, rate = sut.quarterly_or_monthly_values(fd_dindex , this_feature["name"], vals=unit_label, quarterly=["ticino"])

# scale the chart as needed to accomodate for extreme values

y_lim = 98

y_limit = np.percentile(dts_date[unit_label], y_lim)

# label for the chart that alerts to the scale

not_included = F"Values greater than {round(y_limit, 1)}{unit_label} not shown."

# figure caption

chart_notes = F"""

*__Left:__ {this_feature['name']}, {start_date[:7]} through {end_date[:7]}, n={t["loc_date"]}. {not_included} __Right:__ {this_feature['name']} empirical cumulative distribution of survey results.*

"""

md(chart_notes )

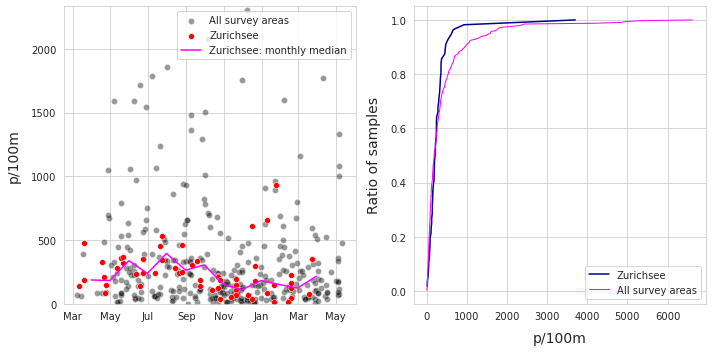

Left: Zurichsee, 2020-03 through 2021-05, n=56. Values greater than 2332.8p/100m not shown. Right: Zurichsee empirical cumulative distribution of survey results.

# months locator, can be confusing

# https://matplotlib.org/stable/api/dates_api.html

# months = mdates.MonthLocator(interval=1)

months_fmt = mdates.DateFormatter("%b")

days = mdates.DayLocator(interval=7)

sns.set_style("whitegrid")

fig, axs = plt.subplots(1,2, figsize=(10,5))

# the survey totals by day

ax = axs[0]

# feature surveys

sns.scatterplot(data=dts_date, x=dts_date.index, y=unit_label, label=top, color="black", alpha=0.4, ax=ax)

# all other surveys

sns.scatterplot(data=fd_dindex, x=fd_dindex.index, y=unit_label, label=this_feature["name"], color="red", s=34, ec="white", ax=ax)

# monthly or quaterly plot

sns.lineplot(data=resample_plot, x=resample_plot.index, y=resample_plot, label=F"{this_feature['name']}: {rate} median", color="magenta", ax=ax)

ax.set_ylim(0,y_limit )

ax.set_ylabel(unit_label, **ck.xlab_k14)

ax.set_xlabel("")

ax.xaxis.set_minor_locator(days)

ax.xaxis.set_major_formatter(months_fmt)

ax.legend()

# the cumlative distributions:

axtwo = axs[1]

# the feature of interest

feature_ecd = ECDF(dt_all[unit_label].values)

sns.lineplot(x=feature_ecd.x, y=feature_ecd.y, color="darkblue", ax=axtwo, label=this_feature["name"])

# the other features

other_features = ECDF(dts_date[unit_label].values)

sns.lineplot(x=other_features.x, y=other_features.y, color="magenta", label=top, linewidth=1, ax=axtwo)

axtwo.set_xlabel(unit_label, **ck.xlab_k14)

axtwo.set_ylabel("Ratio of samples", **ck.xlab_k14)

plt.tight_layout()

plt.show()

13.1.3. Summary data and material types¶

Left: Zurichsee summary of survey totals. Right: Zurichsee material type and percent of total

# get the basic statistics from pd.describe

cs = dt_all[unit_label].describe().round(2)

# change the names

csx = sut.change_series_index_labels(cs, sut.create_summary_table_index(unit_label, lang="EN"))

combined_summary = sut.fmt_combined_summary(csx, nf=[])

fd_mat_totals = sut.fmt_pct_of_total(fd_mat_totals)

fd_mat_totals = sut.make_string_format(fd_mat_totals)

# applly new column names for printing

cols_to_use = {"material":"Material","quantity":"Quantity", "% of total":"% of total"}

fd_mat_t = fd_mat_totals[cols_to_use.keys()].values

# make tables

fig, axs = plt.subplots(1,2, figsize=(8,6))

# summary table

# names for the table columns

a_col = [this_feature["name"], "total"]

axone = axs[0]

sut.hide_spines_ticks_grids(axone)

table_two = sut.make_a_table(axone, combined_summary, colLabels=a_col, colWidths=[.5,.25,.25], bbox=[0,0,1,1], **{"loc":"lower center"})

table_two.get_celld()[(0,0)].get_text().set_text(" ")

# material table

axtwo = axs[1]

axtwo.set_xlabel(" ")

sut.hide_spines_ticks_grids(axtwo)

table_three = sut.make_a_table(axtwo, fd_mat_t, colLabels=list(cols_to_use.values()), colWidths=[.4, .3,.3], bbox=[0,0,1,1], **{"loc":"lower center"})

table_three.get_celld()[(0,0)].get_text().set_text(" ")

plt.tight_layout()

plt.subplots_adjust(wspace=0.2)

plt.show()

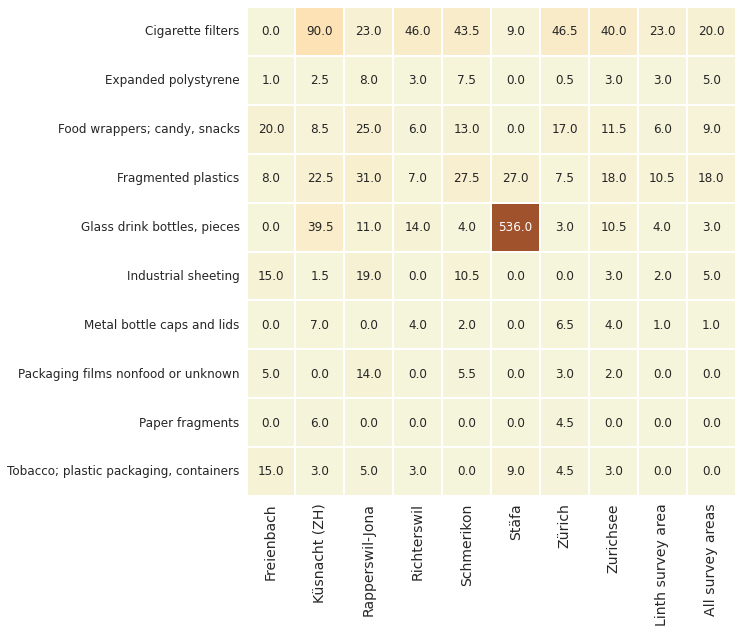

13.2. The most common objects¶

The most common objects are the ten most abundant by quantity AND/OR objects identified in at least 50% of all surveys.

# the top ten by quantity

most_abundant = code_totals.sort_values(by="quantity", ascending=False)[:10]

# the most common

most_common = code_totals[code_totals["fail rate"] >= a_fail_rate].sort_values(by="quantity", ascending=False)

# merge with most_common and drop duplicates

m_common = pd.concat([most_abundant, most_common]).drop_duplicates()

# get percent of total

m_common_percent_of_total = m_common.quantity.sum()/code_totals.quantity.sum()

# figure caption

rb_string = F"""

*__Below:__ {this_feature['name']} most common objects: fail rate >/= {a_fail_rate}% and/or top ten by quantity. Combined, the most abundant objects represent {int(m_common_percent_of_total*100)}% of all objects found.

Note : {unit_label} = median survey value.*

"""

md(rb_string)

Below: Zurichsee most common objects: fail rate >/= 50% and/or top ten by quantity. Combined, the most abundant objects represent 65% of all objects found. Note : p/100m = median survey value.

# format values for table

m_common["item"] = m_common.index.map(lambda x: code_description_map.loc[x])

m_common["% of total"] = m_common["% of total"].map(lambda x: F"{x}%")

m_common["quantity"] = m_common.quantity.map(lambda x: "{:,}".format(x))

m_common["fail rate"] = m_common["fail rate"].map(lambda x: F"{x}%")

m_common[unit_label] = m_common[unit_label].map(lambda x: F"{round(x,1)}")

# final table data

cols_to_use = {"item":"Item","quantity":"Quantity", "% of total":"% of total", "fail rate":"Fail rate", unit_label:unit_label}

all_survey_areas = m_common[cols_to_use.keys()].values

colWidths=[.52, .12, .12, .12, .12]

bbox=[0,0,1,1],

kwargs = {"loc":"lower center"}

colLabels=list(cols_to_use.values())

figsize=(len(colLabels)*2,len(all_survey_areas)*.7)

fig, axs = plt.subplots(figsize=figsize)

sut.hide_spines_ticks_grids(axs)

table_four = sut.make_a_table(axs, all_survey_areas, colLabels=colLabels, colWidths=colWidths, a_color="saddlebrown")

table_four.get_celld()[(0,0)].get_text().set_text(" ")

plt.tight_layout()

plt.show()

13.2.1. Most common objects results by municipality¶

Below: Zurichsee most common objects: median p/100m

# aggregated survey totals for the most common codes for all the water features

m_common_st = fd[fd.code.isin(m_common.index)].groupby([this_level, "loc_date","code"], as_index=False).agg(agg_pcs_quantity)

m_common_ft = m_common_st.groupby([this_level, "code"], as_index=False)[unit_label].median()

# map the desctiption to the code

m_common_ft["item"] = m_common_ft.code.map(lambda x: code_description_map.loc[x])

# pivot that

m_c_p = m_common_ft[["item", this_level, unit_label]].pivot(columns=this_level, index="item")

# quash the hierarchal column index

m_c_p.columns = m_c_p.columns.get_level_values(1)

## the aggregated totals for the feature data

c = sut.aggregate_to_group_name(fd[fd.code.isin(m_common.index)], column="code", name=this_feature["name"], val="med")

m_c_p[this_feature["name"]]= sut.change_series_index_labels(c, {x:code_description_map.loc[x] for x in c.index})

# the aggregated totals of the survey area

c = sut.aggregate_to_group_name(a_data[(a_data.river_bassin == this_bassin)&(a_data.code.isin(m_common.index))], column="code", name=top, val="med")

m_c_p[bassin_label] = sut.change_series_index_labels(c, {x:code_description_map.loc[x] for x in c.index})

# the aggregated totals of all the data

c = sut.aggregate_to_group_name(a_data[(a_data.code.isin(m_common.index))], column="code", name=top, val="med")

m_c_p[top] = sut.change_series_index_labels(c, {x:code_description_map.loc[x] for x in c.index})

# chart that

fig, ax = plt.subplots(figsize=(len(m_c_p.columns)*.9,len(m_c_p)*.9))

axone = ax

sns.heatmap(m_c_p, ax=axone, cmap=cmap2, annot=True, annot_kws={"fontsize":12}, fmt=".1f", square=True, cbar=False, linewidth=.1, linecolor="white")

axone.set_xlabel("")

axone.set_ylabel("")

axone.tick_params(labelsize=14, which="both", axis="x")

axone.tick_params(labelsize=12, which="both", axis="y")

plt.setp(axone.get_xticklabels(), rotation=90)

plt.show()

plt.close()

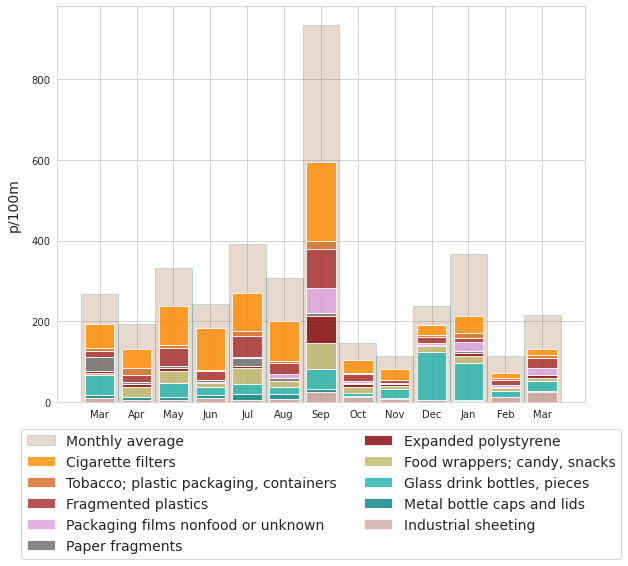

13.2.2. Most common objects monthly average¶

# collect the survey results of the most common objects

m_common_m = fd[(fd.code.isin(m_common.index))].groupby(["loc_date", "date", "code", "groupname"], as_index=False).agg(agg_pcs_quantity)

m_common_m.set_index("date", inplace=True)

# set the order of the chart, group the codes by groupname columns

an_order = m_common_m.groupby(["code", "groupname"], as_index=False).quantity.sum().sort_values(by="groupname")["code"].values

# a manager dict for the monthly results of each code

mgr = {}

# get the monhtly results for each code:

for a_group in an_order:

# resample by month

a_plot = m_common_m[(m_common_m.code==a_group)][unit_label].resample("M").mean().fillna(0)

this_group = {a_group:a_plot}

mgr.update(this_group)

monthly_mc = F"""

*__Below:__ {this_feature['name']}, monthly average survey result {unit_label}. Detail of the most common objects*

"""

md(monthly_mc)

Below: Zurichsee, monthly average survey result p/100m. Detail of the most common objects

# convenience function to lable x axis

def new_month(x):

if x <= 11:

this_month = x

else:

this_month=x-12

return this_month

months={

0:'Jan',

1:'Feb',

2:'Mar',

3:'Apr',

4:'May',

5:'Jun',

6:'Jul',

7:'Aug',

8:'Sep',

9:'Oct',

10:'Nov',

11:'Dec'

}

fig, ax = plt.subplots(figsize=(9,8))

# define a bottom

bottom = [0]*len(mgr["G27"])

# the monhtly survey average for all objects and locations

monthly_fd = fd.groupby(["loc_date", "date"], as_index=False).agg(agg_pcs_quantity)

monthly_fd.set_index("date", inplace=True)

m_fd = monthly_fd[unit_label].resample("M").mean().fillna(0)

# define the xaxis

this_x = [i for i,x in enumerate(m_fd.index)]

# plot the monthly total survey average

ax.bar(this_x, m_fd.to_numpy(), color=a_color, alpha=0.2, linewidth=1, edgecolor="teal", width=1, label="Monthly survey average")

# plot the monthly survey average of the most common objects

for i, a_group in enumerate(an_order):

# define the axis

this_x = [i for i,x in enumerate(mgr[a_group].index)]

# collect the month

this_month = [x.month for i,x in enumerate(mgr[a_group].index)]

# if i == 0 laydown the first bars

if i == 0:

ax.bar(this_x, mgr[a_group].to_numpy(), label=a_group, color=colors_palette[a_group], linewidth=1, alpha=0.6 )

# else use the previous results to define the bottom

else:

bottom += mgr[an_order[i-1]].to_numpy()

ax.bar(this_x, mgr[a_group].to_numpy(), bottom=bottom, label=a_group, color=colors_palette[a_group], linewidth=1, alpha=0.8)

# collect the handles and labels from the legend

handles, labels = ax.get_legend_handles_labels()

# set the location of the x ticks

ax.xaxis.set_major_locator(ticker.FixedLocator([i for i in np.arange(len(this_x))]))

#label the xticks by month

axisticks = ax.get_xticks()

labelsx = [months[new_month(x-1)] for x in this_month]

plt.xticks(ticks=axisticks, labels=labelsx)

ax.set_ylabel(unit_label, **ck.xlab_k14)

# make the legend

# swap out codes for descriptions

new_labels = [code_description_map.loc[x] for x in labels[1:]]

new_labels = new_labels[::-1]

# insert a label for the monthly average

new_labels.insert(0,"Monthly average")

handles = [handles[0], *handles[1:][::-1]]

plt.legend(handles=handles, labels=new_labels, bbox_to_anchor=(.5, -.05), loc="upper center", ncol=2, fontsize=14)

plt.tight_layout()

plt.show()

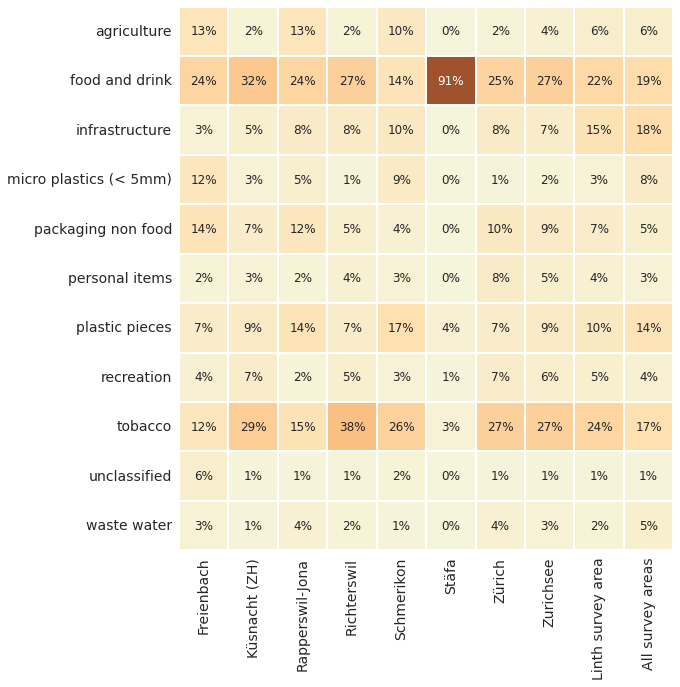

13.3. Utility of the objects found¶

The utility type is based on the utilization of the object prior to it being discarded or object description if the original use is undetermined. Identified objects are classified into one of 260 predefined categories. The categories are grouped according to utilization or item description.

wastewater: items released from water treatment plants includes items likely toilet flushed

micro plastics (< 5mm): fragmented plastics and pre-production plastic resins

infrastructure: items related to construction and maintenance of buildings, roads and water/power supplies

food and drink: all materials related to consuming food and drink

agriculture: primarily industrial sheeting i.e., mulch and row covers, greenhouses, soil fumigation, bale wraps. Includes hard plastics for agricultural fencing, flowerpots etc.

tobacco: primarily cigarette filters, includes all smoking related material

recreation: objects related to sports and leisure i.e., fishing, hunting, hiking etc.

packaging non food and drink: packaging material not identified as food, drink nor tobacco related

plastic fragments: plastic pieces of undetermined origin or use

personal items: accessories, hygiene and clothing related

See the annex for the complete list of objects identified, includes descriptions and group classification. The section Code groups describes each code group in detail and provides a comprehensive list of all objects in a group.

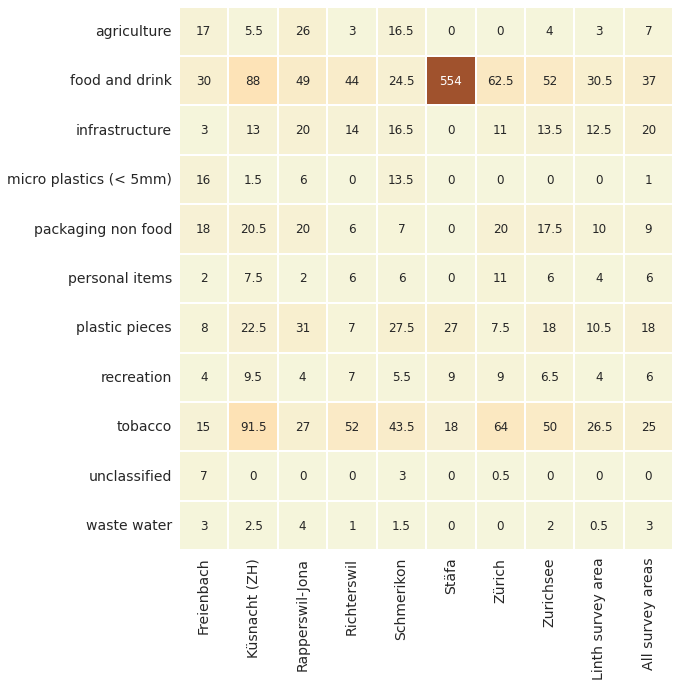

Below: Zurichsee utility of objects found % of total by municipality. Fragmented objects with no clear identification remain classified by size:

# code groups resluts aggregated by survey

groups = ["loc_date","groupname"]

cg_t = fd.groupby([this_level,*groups], as_index=False).agg(agg_pcs_quantity)

# the total per water feature

cg_tq = cg_t.groupby(this_level).quantity.sum()

# get the fail rates for each group per survey

cg_t["fail"]=False

cg_t["fail"] = cg_t.quantity.where(lambda x: x == 0, True)

# aggregate all that for each municipality

agg_this = {unit_label:"median", "quantity":"sum", "fail":"sum", "loc_date":"nunique"}

cg_t = cg_t.groupby([this_level, "groupname"], as_index=False).agg(agg_this)

# assign survey area total to each record

for a_feature in cg_tq.index:

cg_t.loc[cg_t[this_level] == a_feature, "f_total"] = cg_tq.loc[a_feature]

# get the percent of total for each group for each survey area

cg_t["pt"] = (cg_t.quantity/cg_t.f_total).round(2)

# pivot that

data_table = cg_t.pivot(columns=this_level, index="groupname", values="pt")

# aggregated values for the lake

data_table[this_feature["name"]]= sut.aggregate_to_group_name(fd, unit_label=unit_label, column="groupname", name=bassin_label, val="pt")

# repeat for the survey area

data_table[bassin_label] = sut.aggregate_to_group_name(a_data[a_data.river_bassin == this_bassin], unit_label=unit_label, column="groupname", name=bassin_label, val="pt")

# repeat for all the data

data_table[top] = sut.aggregate_to_group_name(a_data, unit_label=unit_label, column="groupname", name=top, val="pt")

data = data_table

fig, ax = plt.subplots(figsize=(11,10))

axone = ax

sns.heatmap(data , ax=axone, cmap=cmap2, annot=True, annot_kws={"fontsize":12}, cbar=False, fmt=".0%", linewidth=.1, square=True, linecolor="white")

axone.set_ylabel("")

axone.set_xlabel("")

axone.tick_params(labelsize=14, which="both", axis="both", labeltop=False, labelbottom=True)

plt.setp(axone.get_xticklabels(), rotation=90, fontsize=14)

plt.setp(axone.get_yticklabels(), rotation=0, fontsize=14)

plt.show()

cg_medpcm = F"""

<br></br>

*__Below:__ {this_feature['name']} utility of objects found median {unit_label}. Fragmented objects with no clear identification remain classified by size:*

"""

md(cg_medpcm)

Below: Zurichsee utility of objects found median p/100m. Fragmented objects with no clear identification remain classified by size:

# median p/50m of all the water features

data_table = cg_t.pivot(columns=this_level, index="groupname", values=unit_label)

# aggregated values for the lake

data_table[this_feature["name"]]= sut.aggregate_to_group_name(fd, unit_label=unit_label, column="groupname", name=bassin_label, val="med")

# the survey area columns

data_table[bassin_label] = sut.aggregate_to_group_name(a_data[a_data.river_bassin == this_bassin], unit_label=unit_label, column="groupname", name=bassin_label, val="med")

# column for all the surveys

data_table[top] = sut.aggregate_to_group_name(a_data, unit_label=unit_label, column="groupname", name=top, val="med")

# merge with data_table

data = data_table

fig, ax = plt.subplots(figsize=(11,10))

axone = ax

sns.heatmap(data , ax=axone, cmap=cmap2, annot=True, annot_kws={"fontsize":12}, fmt="g", cbar=False, linewidth=.1, square=True, linecolor="white")

axone.set_xlabel("")

axone.set_ylabel("")

axone.tick_params(labelsize=14, which="both", axis="both", labeltop=False, labelbottom=True)

plt.setp(axone.get_xticklabels(), rotation=90, fontsize=14)

plt.setp(axone.get_yticklabels(), rotation=0, fontsize=14)

plt.show()

13.4. Annex¶

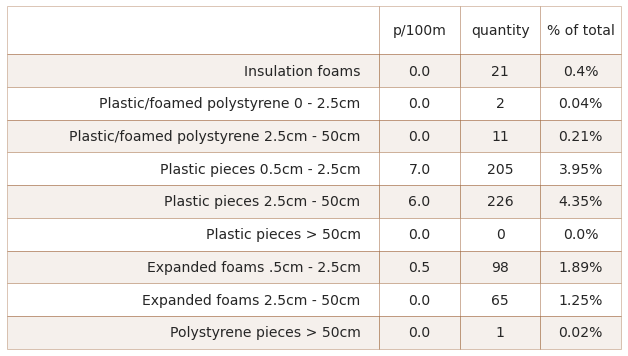

13.4.1. Fragmented foams and plastics by size¶

The table below contains the “Gfoam” and “Gfrags” components grouped for analysis. Objects labeled expanded foams are grouped as Gfoam and includes all expanded polystyrene foamed plastics > 0.5 cm. Plastic pieces and objects made of combined plastic and foamed plastic materials > 0.5 cm. are grouped for analysis as Gfrags.

Below: Zurichsee fragmented foams and plastics by size group.

# collect the data before aggregating foams for all locations in the survey area

# the codes for the foams

some_foams = ["G81", "G82", "G83", "G74"]

# the codes for the fragmented plastics

some_frag_plas = list(before_agg[before_agg.groupname == "plastic pieces"].code.unique())

# aggregate all the codes by loc_date and get the total quantity and the median pcs/m

fd_frags_foams = before_agg[(before_agg.code.isin([*some_frag_plas, *some_foams]))&(before_agg.location.isin(t["locations"]))].groupby(["loc_date","code"], as_index=False).agg(agg_pcs_quantity)

fd_frags_foams = fd_frags_foams.groupby("code").agg({unit_label:"median", "quantity":"sum"})

# add code description and format for printing

fd_frags_foams["item"] = fd_frags_foams.index.map(lambda x: code_description_map.loc[x])

fd_frags_foams["% of total"] = (fd_frags_foams.quantity/fd.quantity.sum()*100).round(2)

fd_frags_foams["% of total"] = fd_frags_foams["% of total"].map(lambda x: F"{x}%")

fd_frags_foams["quantity"] = fd_frags_foams["quantity"].map(lambda x: F"{x:,}")

# table data

data = fd_frags_foams[["item", unit_label, "quantity", "% of total"]]

fig, axs = plt.subplots(figsize=(11,len(data)*.7))

sut.hide_spines_ticks_grids(axs)

a_table = sut.make_a_table(axs, data.values, colLabels=data.columns, colWidths=[.6, .13, .13, .13])

a_table.get_celld()[(0,0)].get_text().set_text(" ")

plt.show()

plt.tight_layout()

plt.close()

13.4.2. The survey locations¶

# display the survey locations

disp_columns = ["latitude", "longitude", "city"]

disp_beaches = dfBeaches.loc[t["locations"]][disp_columns]

disp_beaches.reset_index(inplace=True)

disp_beaches.rename(columns={"slug":"location"}, inplace=True)

disp_beaches.set_index("location", inplace=True, drop=True)

disp_beaches

| latitude | longitude | city | |

|---|---|---|---|

| location | |||

| rastplatz-stampf | 47.215177 | 8.844286 | Rapperswil-Jona |

| zuerichsee_richterswil_benkoem_2 | 47.217646 | 8.698713 | Richterswil |

| pfafikon-bad | 47.206766 | 8.774182 | Freienbach |

| zurichsee_kusnachterhorn_thirkell-whitej | 47.317685 | 8.576860 | Küsnacht (ZH) |

| zurichsee_wollishofen_langendorfm | 47.345890 | 8.536155 | Zürich |

| zurcher-strand | 47.364200 | 8.537420 | Zürich |

| zuerichsee_staefa_hennm | 47.234643 | 8.769881 | Stäfa |

| zuerichsee_waedenswil_colomboc_1 | 47.219547 | 8.691961 | Richterswil |

| schmerikon-bahnhof-strand | 47.224780 | 8.944180 | Schmerikon |

| aabach | 47.220989 | 8.940365 | Schmerikon |

| strandbeiz | 47.215862 | 8.843098 | Rapperswil-Jona |

13.4.3. Inventory of items¶

pd.set_option("display.max_rows", None)

complete_inventory = code_totals[code_totals.quantity>0][["item", "groupname", "quantity","% of total","fail rate"]]

complete_inventory.sort_values(by="quantity", ascending=False)

| item | groupname | quantity | % of total | fail rate | |

|---|---|---|---|---|---|

| code | |||||

| G27 | Cigarette filters | tobacco | 1249 | 24.06 | 94 |

| G200 | Glass drink bottles, pieces | food and drink | 496 | 9.55 | 80 |

| Gfrags | Fragmented plastics | plastic pieces | 444 | 8.55 | 85 |

| G30 | Food wrappers; candy, snacks | food and drink | 363 | 6.99 | 94 |

| G941 | Packaging films nonfood or unknown | packaging non food | 187 | 3.60 | 51 |

| Gfoam | Expanded polystyrene | infrastructure | 164 | 3.16 | 67 |

| G67 | Industrial sheeting | agriculture | 147 | 2.83 | 53 |

| G25 | Tobacco; plastic packaging, containers | tobacco | 139 | 2.68 | 67 |

| G178 | Metal bottle caps and lids | food and drink | 124 | 2.39 | 62 |

| G156 | Paper fragments | packaging non food | 102 | 1.96 | 48 |

| G73 | Foamed items & pieces (non packaging/insulatio... | recreation | 87 | 1.68 | 14 |

| G96 | Sanitary-pads/tampons, applicators | waste water | 82 | 1.58 | 12 |

| G923 | Tissue, toilet paper, napkins, paper towels | personal items | 82 | 1.58 | 30 |

| G74 | Insulation foams | infrastructure | 71 | 1.37 | 41 |

| G177 | Foil wrappers, aluminum foil | food and drink | 66 | 1.27 | 44 |

| G155 | Fireworks paper tubes and fragments | recreation | 64 | 1.23 | 16 |

| G211 | Swabs, bandaging, medical | personal items | 58 | 1.12 | 33 |

| G112 | Industrial pellets (nurdles) | micro plastics (< 5mm) | 52 | 1.00 | 26 |

| G24 | Plastic lid rings | food and drink | 51 | 0.98 | 39 |

| G21 | Drink lids | food and drink | 48 | 0.92 | 41 |

| G31 | Lollypop sticks | food and drink | 43 | 0.83 | 39 |

| G23 | Lids unidentified | packaging non food | 42 | 0.81 | 35 |

| G928 | Ribbons and bows | personal items | 41 | 0.79 | 16 |

| G153 | Cups, food containers, wrappers (paper) | food and drink | 40 | 0.77 | 30 |

| G904 | Plastic fireworks | recreation | 37 | 0.71 | 28 |

| G89 | Plastic construction waste | infrastructure | 36 | 0.69 | 37 |

| G148 | Cardboard (boxes and fragments) | packaging non food | 35 | 0.67 | 19 |

| G159 | Corks | food and drink | 34 | 0.65 | 23 |

| G95 | Cotton bud/swab sticks | waste water | 32 | 0.62 | 33 |

| G10 | Food containers single use foamed or plastic | food and drink | 28 | 0.54 | 17 |

| G125 | Balloons and balloon sticks | recreation | 28 | 0.54 | 25 |

| G50 | String < 1cm | recreation | 25 | 0.48 | 30 |

| G35 | Straws and stirrers | food and drink | 24 | 0.46 | 32 |

| G203 | Tableware ceramic or glass, cups, plates, pieces | food and drink | 23 | 0.44 | 16 |

| G117 | Expanded foams < 5mm | micro plastics (< 5mm) | 23 | 0.44 | 10 |

| G165 | Ice cream sticks, toothpicks, chopsticks | food and drink | 23 | 0.44 | 17 |

| G91 | Biomass holder | waste water | 22 | 0.42 | 19 |

| G32 | Toys and party favors | recreation | 22 | 0.42 | 25 |

| G922 | Labels, bar codes | packaging non food | 22 | 0.42 | 21 |

| G87 | Tape, masking/duct/packing | infrastructure | 21 | 0.40 | 25 |

| G66 | Straps/bands; hard, plastic package fastener | infrastructure | 20 | 0.39 | 26 |

| G940 | Foamed EVA for crafts and sports | recreation | 19 | 0.37 | 10 |

| G157 | Paper | packaging non food | 18 | 0.35 | 7 |

| G905 | Hair clip, hair ties, personal accessories pl... | personal items | 17 | 0.33 | 14 |

| G146 | Paper, cardboard | packaging non food | 17 | 0.33 | 16 |

| G106 | Plastic fragments angular <5mm | micro plastics (< 5mm) | 16 | 0.31 | 12 |

| G208 | Glass or ceramic fragments > 2.5 cm | unclassified | 16 | 0.31 | 12 |

| G131 | Rubber bands | personal items | 16 | 0.31 | 21 |

| G98 | Diapers - wipes | waste water | 13 | 0.25 | 17 |

| G33 | Lids for togo drinks plastic | food and drink | 12 | 0.23 | 17 |

| G942 | Plastic shavings from lathes, CNC machining | unclassified | 11 | 0.21 | 12 |

| G135 | Clothes, footware, headware, gloves | personal items | 11 | 0.21 | 8 |

| G3 | Plastic bags, carier bags | packaging non food | 11 | 0.21 | 8 |

| G34 | Cutlery, plates and trays | food and drink | 11 | 0.21 | 17 |

| G186 | Industrial scrap | infrastructure | 10 | 0.19 | 16 |

| G100 | Medical; containers/tubes/ packaging | waste water | 10 | 0.19 | 10 |

| G921 | Ceramic tile and pieces | infrastructure | 10 | 0.19 | 10 |

| G201 | Jars, includes pieces | food and drink | 10 | 0.19 | 14 |

| G22 | Lids for chemicals, detergents (non-food) | infrastructure | 10 | 0.19 | 10 |

| G28 | Pens, lids, mechanical pencils etc. | personal items | 9 | 0.17 | 12 |

| G152 | Cigarette boxes, tobacco related paper/cardboard | tobacco | 9 | 0.17 | 12 |

| G149 | Paper packaging | packaging non food | 9 | 0.17 | 5 |

| G170 | Wood (processed) | agriculture | 8 | 0.15 | 5 |

| G167 | Matches or fireworks | recreation | 8 | 0.15 | 5 |

| G936 | Sheeting ag. greenhouse film | agriculture | 8 | 0.15 | 8 |

| G925 | Packets: desiccant/ moisture absorbers, plasti... | packaging non food | 7 | 0.13 | 10 |

| G59 | Fishing line monofilament (angling) | recreation | 7 | 0.13 | 12 |

| G93 | Cable ties; steggel, zip, zap straps | infrastructure | 7 | 0.13 | 12 |

| G142 | Rope , string or nets | recreation | 7 | 0.13 | 12 |

| G26 | Cigarette lighters | tobacco | 6 | 0.12 | 10 |

| G933 | Bags, cases for accessories; glasses, electron... | personal items | 6 | 0.12 | 7 |

| G210 | Other glass/ceramic | unclassified | 6 | 0.12 | 3 |

| G161 | Processed timber | agriculture | 6 | 0.12 | 8 |

| G4 | Small plastic bags; freezer, zip-lock etc. | packaging non food | 6 | 0.12 | 7 |

| G20 | Caps and lids | packaging non food | 5 | 0.10 | 5 |

| G90 | Plastic flower pots | agriculture | 5 | 0.10 | 5 |

| G191 | Wire and mesh | agriculture | 5 | 0.10 | 8 |

| G137 | Clothing, towels & rags | personal items | 5 | 0.10 | 8 |

| G124 | Other plastic or foam products | unclassified | 4 | 0.08 | 5 |

| G926 | Chewing gum, often contains plastics | food and drink | 4 | 0.08 | 5 |

| G929 | Electronics and pieces; sensors, headsets etc. | personal items | 4 | 0.08 | 7 |

| G908 | Tape; electrical, insulating | infrastructure | 4 | 0.08 | 7 |

| G171 | Other wood < 50cm | agriculture | 4 | 0.08 | 3 |

| G7 | Drink bottles < = 0.5L | food and drink | 4 | 0.08 | 7 |

| G48 | Rope, synthetic | recreation | 3 | 0.06 | 3 |

| G101 | Dog feces bag | personal items | 3 | 0.06 | 5 |

| G68 | Fiberglass fragments | infrastructure | 3 | 0.06 | 5 |

| G70 | Shotgun cartridges | recreation | 3 | 0.06 | 5 |

| G901 | Mask medical, synthetic | personal items | 3 | 0.06 | 5 |

| G119 | Sheetlike user plastic (>1mm) | micro plastics (< 5mm) | 3 | 0.06 | 1 |

| G916 | Pencils and pieces | personal items | 3 | 0.06 | 5 |

| G134 | Other rubber | unclassified | 3 | 0.06 | 3 |

| G175 | Cans, beverage | food and drink | 3 | 0.06 | 5 |

| G938 | Toothpicks, dental floss plastic | food and drink | 3 | 0.06 | 5 |

| G154 | Newspapers or magazines | personal items | 3 | 0.06 | 1 |

| G930 | Foam earplugs | personal items | 3 | 0.06 | 3 |

| G917 | Terracotta balls | unclassified | 2 | 0.04 | 1 |

| G927 | String trimmer line, used to cut grass, weeds,... | infrastructure | 2 | 0.04 | 3 |

| G939 | Flowers, plants plastic | personal items | 2 | 0.04 | 3 |

| G103 | Plastic fragments rounded <5mm | micro plastics (< 5mm) | 2 | 0.04 | 3 |

| G11 | Cosmetics for the beach, e.g. sunblock | recreation | 2 | 0.04 | 3 |

| G197 | Other metal | infrastructure | 2 | 0.04 | 3 |

| G108 | disk pellets <5mm | micro plastics (< 5mm) | 2 | 0.04 | 3 |

| G114 | Films <5mm | micro plastics (< 5mm) | 2 | 0.04 | 3 |

| G118 | Small industrial spheres <5mm | micro plastics (< 5mm) | 2 | 0.04 | 3 |

| G128 | Tires and belts | unclassified | 2 | 0.04 | 3 |

| G133 | Condoms incl. packaging | waste water | 2 | 0.04 | 1 |

| G138 | Shoes and sandals | personal items | 2 | 0.04 | 3 |

| G145 | Other textiles | personal items | 2 | 0.04 | 1 |

| G198 | Other metal pieces < 50cm | infrastructure | 2 | 0.04 | 3 |

| G2 | Bags | packaging non food | 2 | 0.04 | 1 |

| G204 | Bricks, pipes not plastic | infrastructure | 2 | 0.04 | 3 |

| G29 | Combs, brushes and sunglasses | personal items | 2 | 0.04 | 3 |

| G5 | Generic plastic bags | packaging non food | 2 | 0.04 | 1 |

| G43 | Tags fishing or industry (security tags, seals) | recreation | 2 | 0.04 | 3 |

| G107 | Cylindrical pellets < 5mm | micro plastics (< 5mm) | 2 | 0.04 | 1 |

| G914 | Paperclips, clothespins, plastic utility items | personal items | 1 | 0.02 | 1 |

| G39 | Gloves | personal items | 1 | 0.02 | 1 |

| G99 | Syringes - needles | personal items | 1 | 0.02 | 1 |

| G115 | Foamed plastic <5mm | micro plastics (< 5mm) | 1 | 0.02 | 1 |

| G12 | Cosmetics, non-beach use personal care containers | personal items | 1 | 0.02 | 1 |

| G943 | Fencing agriculture, plastic | agriculture | 1 | 0.02 | 1 |

| G126 | Balls | recreation | 1 | 0.02 | 1 |

| G900 | Gloves latex personal protective equipment | personal items | 1 | 0.02 | 1 |

| G40 | Gloves household/gardening | personal items | 1 | 0.02 | 1 |

| G902 | Mask medical, cloth | personal items | 1 | 0.02 | 1 |

| G139 | Backpacks | personal items | 1 | 0.02 | 1 |

| G140 | Bags, burlap, hessian, jute or hemp | agriculture | 1 | 0.02 | 1 |

| G935 | Walking stick pads and pieces, often elastomer... | personal items | 1 | 0.02 | 1 |

| G931 | Tape-caution for barrier, police, construction... | infrastructure | 1 | 0.02 | 1 |

| G913 | Pacifier | personal items | 1 | 0.02 | 1 |

| G147 | Paper bags | packaging non food | 1 | 0.02 | 1 |

| G173 | Other | unclassified | 1 | 0.02 | 1 |

| G176 | Cans, food | food and drink | 1 | 0.02 | 1 |

| G194 | Cables, metal wire(s) often inside rubber or p... | infrastructure | 1 | 0.02 | 1 |

| G195 | Batteries - household | personal items | 1 | 0.02 | 1 |

| G6 | Bottles and containers, plastic non food/drink | packaging non food | 1 | 0.02 | 1 |

| G38 | Coverings; plastic packaging, sheeting for pro... | unclassified | 1 | 0.02 | 1 |

| G109 | Flat pellets <5mm | micro plastics (< 5mm) | 1 | 0.02 | 1 |

| G111 | Spheruloid pellets < 5mm | micro plastics (< 5mm) | 1 | 0.02 | 1 |

| G919 | Nails, screws, bolts etc. | infrastructure | 1 | 0.02 | 1 |

| G918 | Safety pins, paper clips, small metal utility ... | personal items | 1 | 0.02 | 1 |

| G213 | Paraffin wax | recreation | 1 | 0.02 | 1 |

| G65 | Buckets | agriculture | 1 | 0.02 | 1 |